The concept of a limit of a sequence is further generalized to the concept of a limit of a topological net, and is closely related to limit and direct limit in category theory. In formulas, a limit is usually abbreviated as lim, as in $\lim(a_n) = a$, and the fact of approaching a limit is represented by the right arrow (→), as in $a_n \to a$.

Limit of a function

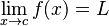

Augustin-Louis Cauchy in 1821, followed by Karl Weierstrass, formalized the definition of the limit of a function, which became known in the 19th century as the (ε, δ)-definition of limit. It states that f(x) has limit L as x approaches c if for every ε > 0 there exists a δ > 0 such that |f(x) − L| < ε whenever 0 < |x − c| < δ.

The definition uses ε (the lowercase Greek letter epsilon) to represent a small positive number, so that "f(x) becomes arbitrarily close to L" means that f(x) eventually lies in the interval (L − ε, L + ε), which can also be written using the absolute value sign as |f(x) − L| < ε. The phrase "as x approaches c" then refers to values of x whose distance from c is less than some positive number δ (the lowercase Greek letter delta), that is, values of x within either (c − δ, c) or (c, c + δ), which can be expressed as 0 < |x − c| < δ. The first inequality means that the distance between x and c is greater than 0, so x ≠ c, while the second indicates that x is within distance δ of c.

Note that this definition of a limit can hold even if f(c) ≠ L. Indeed, the function f need not even be defined at c.
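As a rough numerical illustration of the (ε, δ) condition, the sketch below samples points with 0 < |x − c| < δ and checks that |f(x) − L| < ε at each of them. The helper name `satisfies_eps_delta`, the example f(x) = 3x + 1 with c = 2 and L = 7, and the choice δ = ε/3 are all assumptions made for illustration. Sampling finitely many points can refute a candidate δ, but it cannot prove a limit.

```python
def satisfies_eps_delta(f, c, L, eps, delta, samples=1000):
    """Checks |f(x) - L| < eps at sampled x with 0 < |x - c| < delta.

    This is a sampling check only, not a proof of the limit.
    """
    for k in range(1, samples + 1):
        offset = delta * k / (samples + 1)  # strictly between 0 and delta
        for x in (c - offset, c + offset):  # both sides of c, never c itself
            if abs(f(x) - L) >= eps:
                return False
    return True


def example(x):
    # Hypothetical example function: |example(x) - 7| = 3 * |x - 2|.
    return 3.0 * x + 1.0


for eps in (1.0, 0.1, 0.001):
    # delta = eps / 3 is enough here because of the factor 3 above.
    print(eps, satisfies_eps_delta(example, 2.0, 7.0, eps, eps / 3.0))
```

Choosing δ too generously, for instance δ = ε for the same function, makes the check fail, which mirrors the fact that δ has to be chosen in response to ε.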

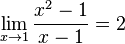

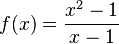

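For example, if $f(x) = \frac{x^2 - 1}{x - 1}$, then f(1) is not defined, yet as x moves arbitrarily close to 1, f(x) correspondingly approaches 2:

| f(0.9) | f(0.99) | f(0.999) | f(1.0) | f(1.001) | f(1.01) | f(1.1) |
| --- | --- | --- | --- | --- | --- | --- |
| 1.900 | 1.990 | 1.999 | ⇒ undefined ⇐ | 2.001 | 2.010 | 2.100 |

In other words, $\lim_{x \to 1} \frac{x^2 - 1}{x - 1} = 2$.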
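The tabulated values can be reproduced numerically. This sketch assumes that the function behind the table is f(x) = (x² − 1)/(x − 1), which agrees with every entry shown; the names `f`, `xs`, and `table` are illustrative.

```python
def f(x):
    # Assumed example function; the quotient is undefined at x = 1.
    return (x * x - 1.0) / (x - 1.0)


xs = [0.9, 0.99, 0.999, 1.001, 1.01, 1.1]
table = {x: f(x) for x in xs}

for x, value in table.items():
    print(f"f({x}) = {value:.3f}")

# Evaluating exactly at x = 1 divides zero by zero, which Python rejects.
try:
    f(1.0)
    defined_at_one = True
except ZeroDivisionError:
    defined_at_one = False
print("f(1.0) defined:", defined_at_one)
```

Away from x = 1 the quotient simplifies to x + 1, which is why the values close in on 2 from both sides even though the function has no value at 1.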

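In addition to limits at finite values, functions can also have limits at infinity. For example, consider $f(x) = \frac{2x - 1}{x}$:

- f(100) = 1.9900

- f(1000) = 1.9990

- f(10000) = 1.9999

As x becomes extremely large, the value of f(x) approaches 2, and it can be made as close to 2 as desired by choosing x sufficiently large.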

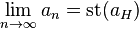
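The behaviour at infinity can be checked with exact rational arithmetic. The sketch assumes the example function is f(x) = (2x − 1)/x, which matches the listed values; `x_threshold` is a hypothetical helper, not part of any library.

```python
from fractions import Fraction


def f(x):
    # Assumed example function, consistent with the values listed above.
    return (2 * x - 1) / x


def x_threshold(eps):
    # For x > 0, |f(x) - 2| = 1/x, so every x > 1/eps lies within eps of 2.
    return 1 / Fraction(eps)


for k in range(2, 7):
    x = Fraction(10 ** k)
    gap = 2 - f(x)  # exact distance from the candidate limit 2
    print(f"x = 10^{k}: f(x) = {float(f(x))}, 2 - f(x) = {gap}")

eps = Fraction(1, 1000)
x0 = x_threshold(eps)
print("any x greater than", x0, "keeps f(x) within", eps, "of 2")
```

Using `Fraction` avoids floating-point rounding, so the gap 2 − f(x) comes out as exactly 1/x.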

Limit of a sequence

Consider the following sequence: 1.79, 1.799, 1.7999, ... The numbers are "approaching" 1.8, the limit of the sequence.

Formally, suppose $a_1, a_2, \ldots$ is a sequence of real numbers. The real number L is the limit of this sequence, written $\lim_{n \to \infty} a_n = L$, if the following condition holds:

- For every real number $\varepsilon > 0$, there exists a natural number $n_0$ such that for all $n > n_0$, $|a_n - L| < \varepsilon$.
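For the sequence 1.79, 1.799, 1.7999, ... the index n0 required by the definition can be computed for any given ε. The closed form a(n) = 1.8 − 10^−(n+1) used below is an assumption that reproduces the listed terms, and `find_n0` is an illustrative helper that relies on the error shrinking with every step.

```python
from fractions import Fraction

LIMIT = Fraction(18, 10)


def a(n):
    # Assumed closed form: a(1) = 1.79, a(2) = 1.799, a(3) = 1.7999, ...
    return LIMIT - Fraction(1, 10 ** (n + 1))


def find_n0(eps):
    """Smallest n0 such that |a(n) - LIMIT| < eps for all n > n0.

    Valid here because |a(n) - LIMIT| decreases as n grows, so it is
    enough to find the first term that falls within eps of the limit.
    """
    n0 = 0
    while abs(a(n0 + 1) - LIMIT) >= eps:
        n0 += 1
    return n0


for eps in (Fraction(1, 10), Fraction(1, 1000), Fraction(1, 10 ** 6)):
    print("eps =", eps, "-> n0 =", find_n0(eps))
```

A smaller ε forces a larger n0, but some n0 always exists, which is exactly what the definition demands.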

The limit of a sequence and the limit of a function are closely related. On one hand, the limit of a sequence $a_n$ as n goes to infinity is simply the limit at infinity of a function defined on the natural numbers n. On the other hand, if the limit L of a function f(x) as x approaches c exists, then it equals the limit of the sequence $f(x_n)$ for every sequence $x_n$ that approaches c with $x_n$ never equal to c. One such sequence is $x_n = c + 1/n$.
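The link between the two kinds of limit can be seen numerically by feeding a sequence of inputs x_n = c + 1/n, which approaches c without ever equalling it, into a function and watching the outputs f(x_n). The function sin(x)/x and the point c = 0 are a hypothetical example chosen for this sketch; its limit at 0 is 1.

```python
import math


def f(x):
    # Hypothetical example function; it is not defined at x = 0.
    return math.sin(x) / x


c = 0.0
indices = (1, 10, 100, 1000, 10000)
outputs = [f(c + 1.0 / n) for n in indices]

for n, value in zip(indices, outputs):
    print(f"n = {n}: f(c + 1/n) = {value:.10f}")
```

The outputs form an ordinary sequence indexed by n, and their limit as n grows is the limit of the function at c.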

Limit as standard part

In the context of a hyperreal enlargement of the number system, the limit of a sequence $(a_n)$ can be expressed as the standard part of the value $a_H$ of the natural extension of the sequence at an infinite hypernatural index $n = H$. Thus,

$$\lim_{n \to \infty} a_n = \operatorname{st}(a_H).$$

Conversely, the standard part of a hyperreal $a = [a_n]$, represented in the ultrapower construction by a Cauchy sequence $(a_n)$, is simply the limit of that sequence:

$$\operatorname{st}(a) = \lim_{n \to \infty} a_n.$$

Convergence and fixed point

A formal definition of convergence can be stated as follows. Suppose $\{p_n\}$, as $n$ goes from $0$ to $\infty$, is a sequence that converges to a fixed point $p$, with $p_n \neq p$ for all $n$. If positive constants $\lambda$ and $\alpha$ exist with

$$\lim_{n \to \infty} \frac{|p_{n+1} - p|}{|p_n - p|^{\alpha}} = \lambda,$$

then $\{p_n\}$, as $n$ goes from $0$ to $\infty$, converges to $p$ of order $\alpha$, with asymptotic error constant $\lambda$.
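The order of convergence and the asymptotic error constant can be estimated from the errors of a concrete sequence. The sketch assumes the usual reading of the definition, in which |p(n+1) − p| / |p(n) − p|^α tends to λ, and uses Newton's method for x² − 2 = 0 as a hypothetical example whose limit is √2. The helper names are illustrative.

```python
import math


def newton_sqrt2(p0, steps):
    """Iterates p -> p - (p*p - 2) / (2*p), Newton's method for x^2 - 2 = 0."""
    seq = [p0]
    for _ in range(steps):
        p = seq[-1]
        seq.append(p - (p * p - 2.0) / (2.0 * p))
    return seq


def estimate_orders(errors):
    """Estimates the order from consecutive error triples.

    If e(n+1) is roughly lam * e(n)**alpha, then
    alpha is roughly log(e(n+1) / e(n)) / log(e(n) / e(n-1)).
    """
    return [
        math.log(errors[k + 1] / errors[k]) / math.log(errors[k] / errors[k - 1])
        for k in range(1, len(errors) - 1)
    ]


# Only four steps: later iterates reach the limits of float precision.
iterates = newton_sqrt2(1.0, 4)
errors = [abs(p - math.sqrt(2.0)) for p in iterates]
orders = estimate_orders(errors)
lam = errors[-1] / errors[-2] ** 2  # error-constant estimate for alpha = 2

print("estimated orders:", orders)
print("estimated error constant:", lam)
```

The order estimates settle near 2, which is consistent with quadratic convergence, and the error-constant estimate lands close to 1/(2√2), the value expected for this particular iteration.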

Given a function $f$ with a fixed point $p$, the following checklist can be used to check how the fixed-point iteration $p_{n+1} = f(p_n)$ converges to $p$.

- 1) First check that p is indeed a fixed point: $f(p) = p$.

- 2) Check for linear convergence. Start by finding $\lvert f'(p) \rvert$. If:

| Condition | Conclusion |
| --- | --- |
| $\lvert f'(p) \rvert \in (0, 1)$ | there is linear convergence |
| $\lvert f'(p) \rvert > 1$ | the sequence diverges |
| $\lvert f'(p) \rvert = 0$ | there is at least linear convergence and possibly something better; the expression should be checked for quadratic convergence |

- 3) If convergence is found to be better than linear, the expression should be checked for quadratic convergence. Start by finding $\lvert f''(p) \rvert$. If:

| Condition | Conclusion |
| --- | --- |
| $\lvert f''(p) \rvert \neq 0$ | there is quadratic convergence, provided that $f''$ is continuous |
| $\lvert f''(p) \rvert = 0$ | there is something even better than quadratic convergence |
| $\lvert f''(p) \rvert$ does not exist | there is convergence that is better than linear but still not quadratic |
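The checklist can be written as a small decision function. This is a minimal sketch that assumes the derivative values f′(p) and f″(p) are already known; `classify_fixed_point`, its return strings, and the zero tolerance `tol` are illustrative choices. The case |f′(p)| = 1 is not addressed by the checklist and is reported as such.

```python
import math


def classify_fixed_point(d1, d2=None, tol=1e-12):
    """Applies the checklist given d1 = f'(p) and d2 = f''(p).

    Pass d2=None when the second derivative does not exist at p.
    `tol` is an assumed threshold for treating a float as zero.
    """
    size = abs(d1)
    if size > 1:
        return "diverges"
    if size == 1:
        return "not covered by the checklist"
    if size > tol:
        return "linear"
    # f'(p) is numerically zero: better than linear, so examine f''(p).
    if d2 is None:
        return "better than linear, not quadratic"
    if abs(d2) > tol:
        return "quadratic"  # assumes f'' is continuous near p
    return "better than quadratic"


# Hypothetical example 1: f(x) = cos(x), fixed point p near 0.739085.
p_cos = 0.7390851332151607
print(classify_fixed_point(-math.sin(p_cos)))

# Hypothetical example 2: f(x) = x/2 + 1/x (Newton's map for x^2 - 2),
# fixed point sqrt(2), with f'(x) = 1/2 - 1/x^2 and f''(x) = 2/x^3.
p_newton = math.sqrt(2.0)
print(classify_fixed_point(0.5 - 1.0 / p_newton ** 2, 2.0 / p_newton ** 3))
```

The tolerance is needed because a derivative that is exactly zero in theory usually evaluates to a tiny nonzero float in practice.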
